Mert Kilickaya

Hello! I am an AI researcher focused on deep representation learning. I help build agents that autonomously derive supervision from open-ended data streams and update themselves continuously.

I have contributed to self-supervised continual learning at the Learning to Learn Lab and earned my PhD in deep vision at the QUvA Lab under Arnold Smeulders.

News

Experience

Agendia, Amsterdam, Netherlands

ML Researcher

Learning to Learn Lab, Eindhoven University of Technology, Netherlands

ML Researcher

QUvA Deep Vision Lab, University of Amsterdam, Netherlands

PhD Researcher

Huawei Visual Search Lab, Helsinki, Finland

Research Scientist Intern

Research

Patents

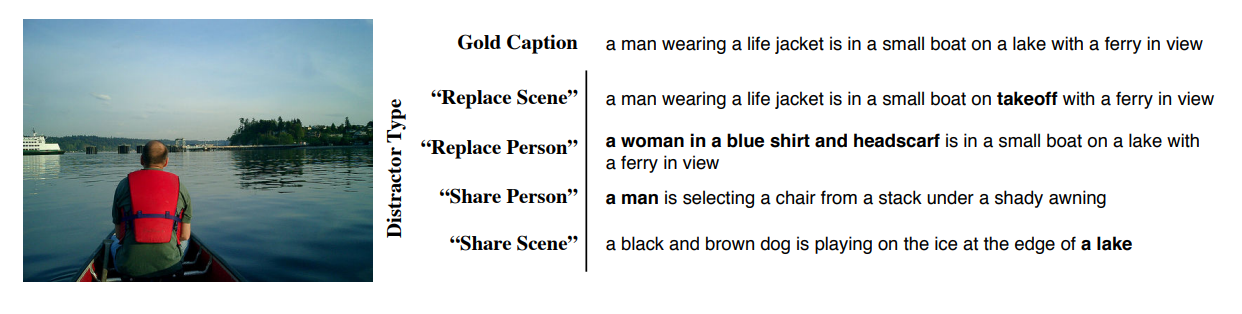

Visual Image Search via Conversational Interaction (Huawei)

Mert Kilickaya, Baiqiang XIA

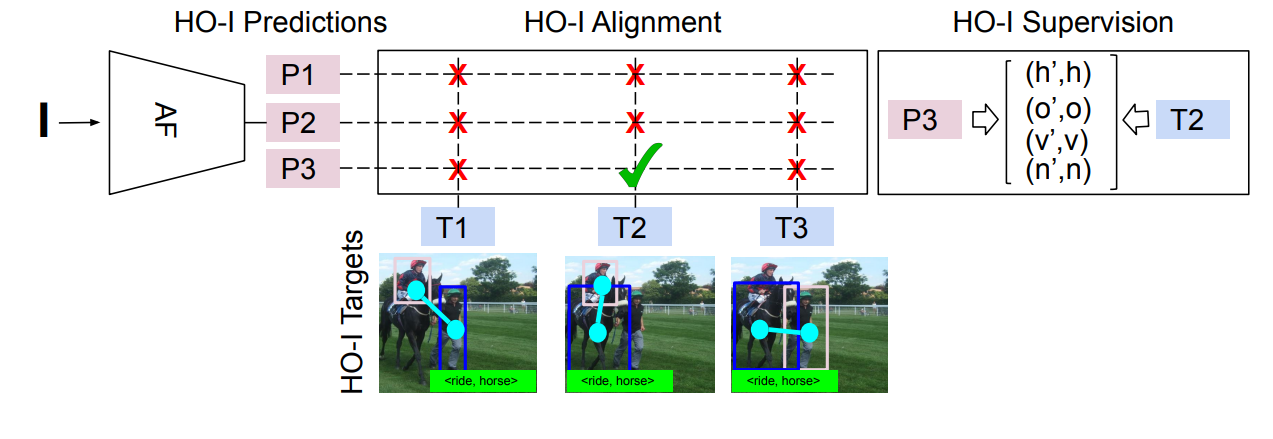

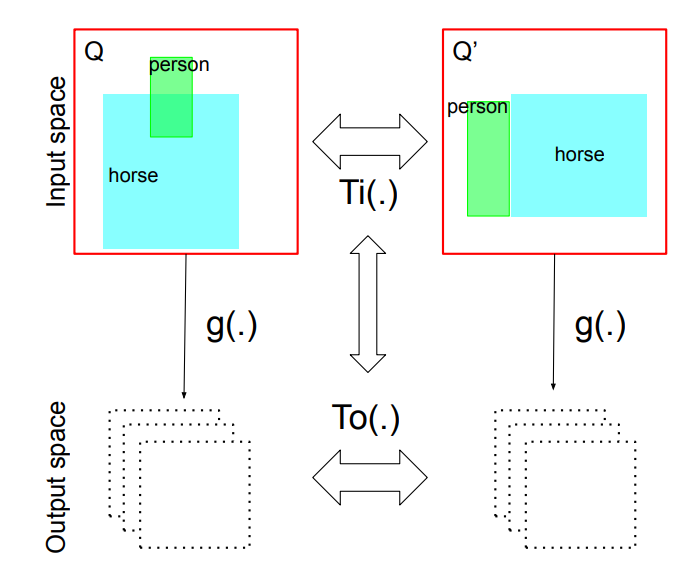

Network for Interacted Object Localization (Qualcomm)

Mert Kilickaya, Arnold Smeulders

Subject-Object Interaction Recognition Model (Qualcomm)

Mert Kilickaya, Stratis Gavves, Arnold Smeulders

Context-driven Learning of Human-object Interactions (Qualcomm)

Mert Kilickaya, Noureldien Hussein, Stratis Gavves, Arnold Smeulders

Supervision

I am very fortunate to have crossed paths with these talented individuals.

Fangqin Zhou (PhD, TU/e)

Tommie Kerssies (MSc, TU/e)